Uncertainty-Aware Deep Neural Representations for Visual Analysis of Vector Field Data

Atul Kumar - Indian Institute of Technology Kanpur, Kanpur, India

Siddharth Garg - Indian Institute of Technology Kanpur, Kanpur, India

Soumya Dutta - Indian Institute of Technology Kanpur (IIT Kanpur), Kanpur, India

Room: Palma Ceia I

2024-10-16T12:42:00Z

Keywords

Implicit Neural Network, Uncertainty, Monte Carlo Dropout, Deep Ensemble, Vector Field, Visualization, Deep Learning.

Abstract

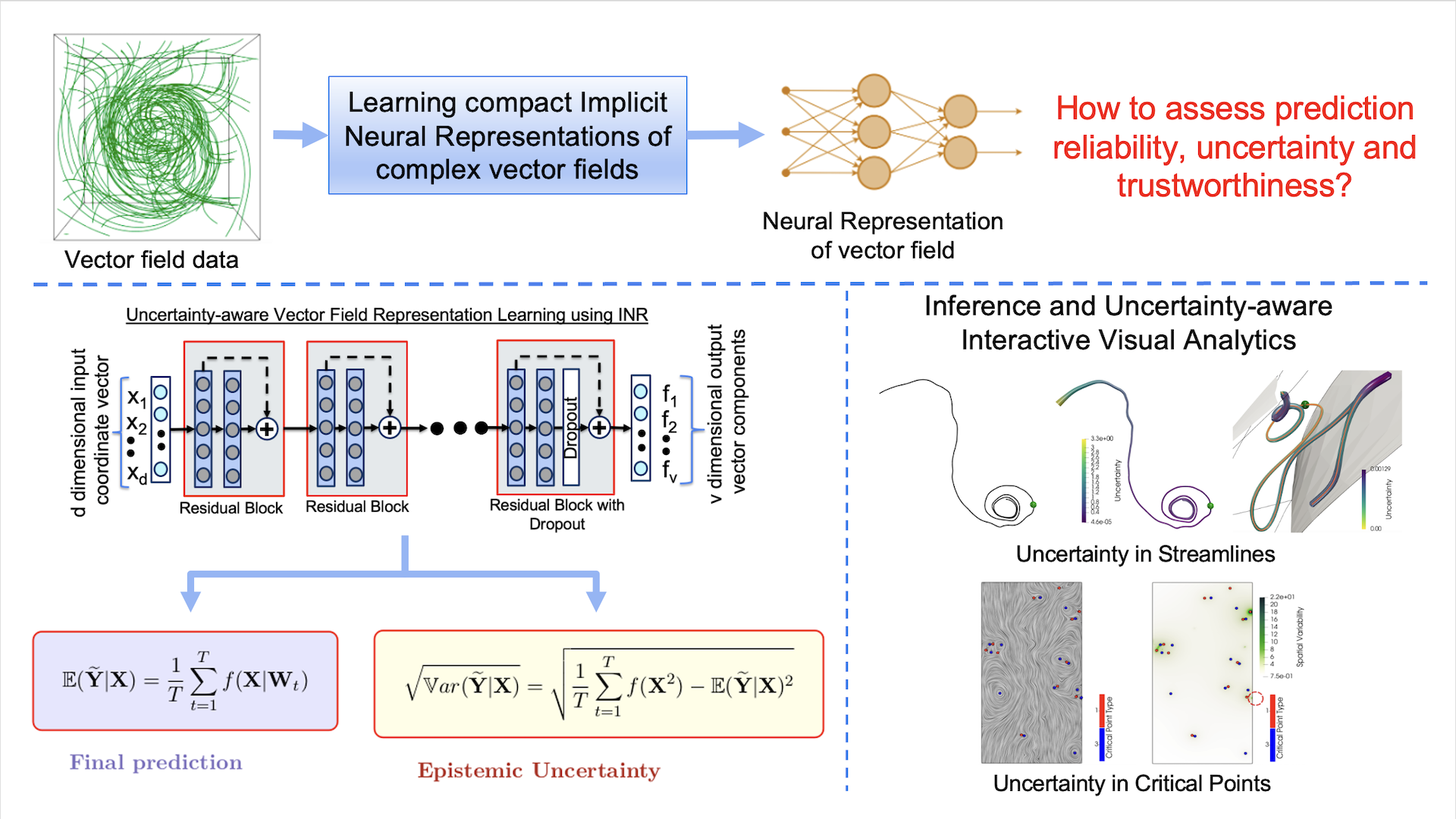

The widespread use of Deep Neural Networks (DNNs) has recently resulted in their application to challenging scientific visualization tasks. While advanced DNNs demonstrate impressive generalization abilities, understanding factors like prediction quality, confidence, robustness, and uncertainty is crucial. These insights aid application scientists in making informed decisions. However, DNNs lack inherent mechanisms to measure prediction uncertainty, prompting the creation of distinct frameworks for constructing robust uncertainty-aware models tailored to various visualization tasks. In this work, we develop uncertainty-aware implicit neural representations to model steady-state vector fields effectively. We comprehensively evaluate the efficacy of two principled deep uncertainty estimation techniques: (1) Deep Ensemble and (2) Monte Carlo Dropout, aimed at enabling uncertainty-informed visual analysis of features within steady vector field data. Our detailed exploration using several vector data sets indicates that uncertainty-aware models generate informative visualization results of vector field features. Furthermore, incorporating prediction uncertainty improves the resilience and interpretability of our DNN model, rendering it applicable for the analysis of non-trivial vector field data sets.
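To make the two uncertainty estimation techniques named in the abstract concrete, the following is a minimal sketch in plain Python and is not the authors' implementation. It assumes a tiny, untrained coordinate network that maps a 3D position to a 3D vector; the layer sizes, the tanh activation, the dropout rate, and all function names (`make_mlp`, `forward`, `mc_dropout_predict`, `ensemble_predict`) are hypothetical choices made for illustration. Monte Carlo Dropout keeps dropout active at inference and aggregates repeated stochastic passes through one network, while a Deep Ensemble aggregates the predictions of several independently initialized networks. In both cases the per-component standard deviation serves as a simple uncertainty estimate.

```python
import math
import random


def make_mlp(rng, sizes):
    """Randomly initialised weights for a small MLP (untrained, illustration only)."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        w = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
             for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((w, b))
    return layers


def forward(layers, x, rng=None, p_drop=0.0):
    """Map a position x to a vector; if rng is given, apply dropout to hidden units."""
    h = list(x)
    for i, (w, b) in enumerate(layers):
        h = [sum(wi * hi for wi, hi in zip(row, h)) + bi for row, bi in zip(w, b)]
        if i < len(layers) - 1:  # hidden layers only; the output layer stays linear
            h = [math.tanh(v) for v in h]  # activation choice is an assumption
            if rng is not None and p_drop > 0.0:
                keep = 1.0 - p_drop
                # Inverted dropout: zero a unit with probability p_drop, rescale the rest.
                h = [v / keep if rng.random() < keep else 0.0 for v in h]
    return h


def mean_and_std(samples):
    """Per-component mean and (population) standard deviation of predicted vectors."""
    n = len(samples)
    dims = len(samples[0])
    mean = [sum(s[d] for s in samples) / n for d in range(dims)]
    std = [math.sqrt(sum((s[d] - mean[d]) ** 2 for s in samples) / n)
           for d in range(dims)]
    return mean, std


def mc_dropout_predict(layers, x, n_passes=50, p_drop=0.1, seed=0):
    """Monte Carlo Dropout: repeated stochastic passes through one network."""
    rng = random.Random(seed)
    return mean_and_std([forward(layers, x, rng, p_drop) for _ in range(n_passes)])


def ensemble_predict(members, x):
    """Deep Ensemble: one deterministic pass through each member network."""
    return mean_and_std([forward(m, x) for m in members])


if __name__ == "__main__":
    x = [0.25, -0.5, 0.75]  # hypothetical query position in the field
    net = make_mlp(random.Random(1), [3, 16, 16, 3])
    print("MC Dropout mean/std:", mc_dropout_predict(net, x))
    members = [make_mlp(random.Random(s), [3, 16, 16, 3]) for s in range(5)]
    print("Ensemble mean/std:", ensemble_predict(members, x))
```

In a real setting each network would first be trained on the vector field samples, and the standard deviation could then be mapped to color or opacity alongside the predicted vectors. The sketch only shows how the two estimates are aggregated from repeated predictions.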