Towards Real-Time Speech Segmentation for Glanceable Conversation Visualization

Shanna Li Ching Hollingworth - University of Calgary, Calgary, Canada

Wesley Willett - University of Calgary, Calgary, Canada

Room: Bayshore II

2024-10-14T16:00:00Z

Abstract

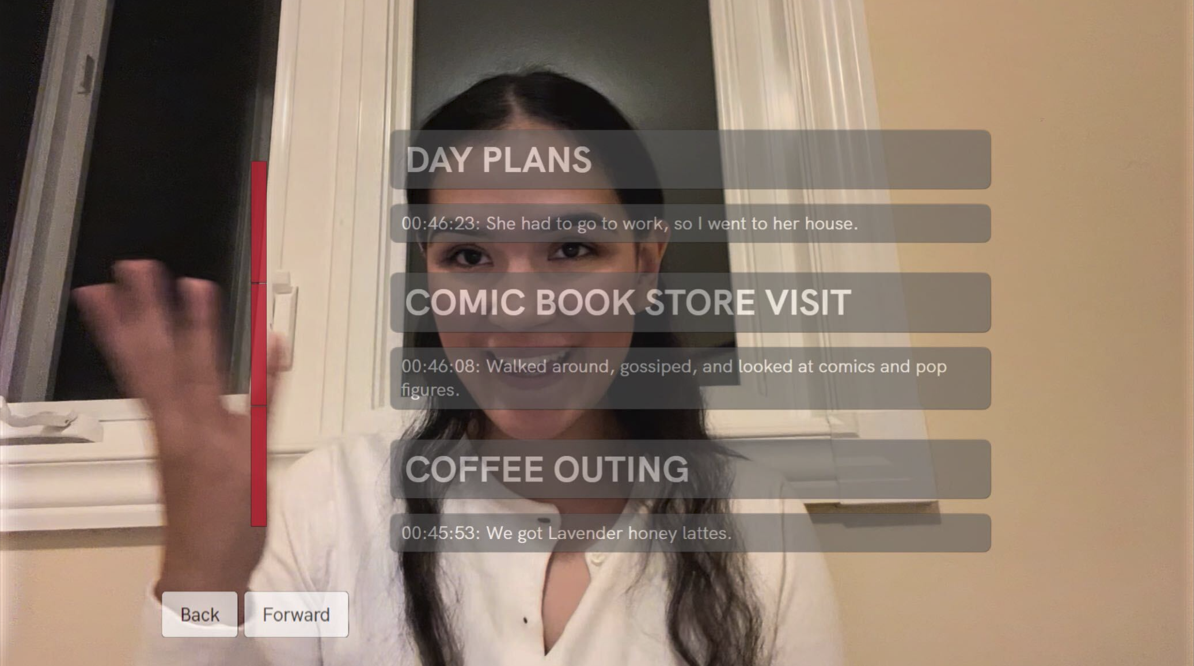

We explore the use of segmentation and summarization methods for generating real-time conversation topic timelines in the context of glanceable Augmented Reality (AR) visualization. Conversation timelines can summarize and contextualize conversations as they happen, helping to keep them on track. Dialogue and conversations are broad and unpredictable by nature, and our processing happens in real time. As a result, not all relevant information may be present in the text at the moment it is processed. We therefore present considerations and challenges that may be less prevalent in traditional implementations of topic classification and dialogue segmentation. Furthermore, we discuss how AR visualization requirements and design practices introduce an additional layer of decision making, which must be factored directly into the text-processing algorithms. We explore three segmentation strategies: dialogue segmentation based on the text of the entire conversation, segmentation at 1-minute intervals, and segmentation at 10-second intervals. We then discuss our results.
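The two fixed-interval strategies are the easiest to picture in code. The following Python is a minimal, hypothetical sketch and not the authors' implementation. It assumes the speech recognizer delivers utterances as (start time in seconds, text) pairs in chronological order. The names `Utterance` and `IntervalSegmenter` are our own. Each closed segment would then be passed to a summarization step (not shown) to produce one timeline entry.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Utterance:
    start: float  # seconds since the conversation began (assumed input format)
    text: str


class IntervalSegmenter:
    """Hypothetical streaming segmenter: groups utterances into fixed-length
    windows (e.g. 10 s or 60 s) keyed by the window containing each start time.
    Assumes utterances arrive in non-decreasing start-time order."""

    def __init__(self, interval_s: float):
        if interval_s <= 0:
            raise ValueError("interval_s must be positive")
        self.interval_s = interval_s
        self._window: Optional[int] = None  # index of the currently open window
        self._buffer: List[str] = []        # utterance texts in the open window

    def push(self, u: Utterance) -> List[Tuple[float, str]]:
        """Add one utterance; return any segment that this utterance closes."""
        window = int(u.start // self.interval_s)
        closed = []
        if self._window is not None and window != self._window:
            closed.append(self._emit())
        self._window = window
        self._buffer.append(u.text)
        return closed

    def flush(self) -> List[Tuple[float, str]]:
        """Close the last, partially filled window (e.g. when the conversation ends)."""
        return [self._emit()] if self._buffer else []

    def _emit(self) -> Tuple[float, str]:
        # Segment = (window start time in seconds, concatenated utterance text).
        segment = (self._window * self.interval_s, " ".join(self._buffer))
        self._buffer = []
        return segment


if __name__ == "__main__":
    seg = IntervalSegmenter(interval_s=10.0)  # use 60.0 for 1-minute windows
    stream = [
        Utterance(0.5, "Let's plan the trip."),
        Utterance(7.2, "Flights first?"),
        Utterance(12.0, "The budget is tight."),
    ]
    for u in stream:
        for start, text in seg.push(u):
            print(f"[{start:.0f}s] {text}")  # prints "[0s] Let's plan the trip. Flights first?"
    for start, text in seg.flush():
        print(f"[{start:.0f}s] {text}")      # prints "[10s] The budget is tight."
```

In this sketch, a window closes only when an utterance from a later window arrives or the stream is flushed. A deployed system might instead close windows on a timer. Shorter intervals produce timeline entries sooner, but each summary then sees less text. This mirrors the concern above that relevant information may not yet be present when a segment is processed.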